Last week I had the opportunity to speak at the third Mid-Atlantic JMP Users Group (MAJUG) conference, hosted by the University of Delaware in Newark, Delaware. The opening remarks were given by Michael Chajes, Dean of the College of Engineering. He used the theme of the event, "JMP as Catalyst for Engineering and Science", to emphasize that the government and the general public need to realize that science alone is not enough for the technological advances the future requires: we also need engineering. It was a nice lead-in to my opening slide, a quote by Theodore von Kármán:

Scientists discover the world that exists;

engineers create the world that never was.

I talked about some of the things that made Einstein famous (traveling on a beam of light, turning off gravity, mass being a form of energy), as well as some things that may not be well known. Did you know that he used statistics in his first published paper, "Conclusions Drawn from the Phenomena of Capillarity" (Annalen der Physik 4 (1901), 513-523)?

Einstein "started from the simple idea of attractive forces amongst the molecules", which allowed him to write the potential of a collection of identical molecules as a sum over all pair interactions, $P = P_\infty - \tfrac{1}{2} c^2 \sum\sum \varphi(r)$. In this equation "c is a characteristic constant of the molecule, φ(r), however, is a function of the distance of the molecules, which is independent of the nature of the molecule". "In analogy with gravitational forces", he postulated that the constant $c$ is an additive function of the atoms in the molecule, i.e., $c = \sum c_\alpha$, which further simplifies the potential energy per unit volume to $P = P_\infty - K\left(\sum c_\alpha\right)^2/\nu^2$. To study the validity of this relationship, Einstein "took all the data from W. Ostwald's book on general chemistry" and determined the values of $c$ for 17 different molecules made up of carbon (C), hydrogen (H), and oxygen (O).
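Grouping the atoms of a molecule by element makes explicit the linear model that Einstein fitted. Writing $n_C$, $n_H$, and $n_O$ (our notation, not Einstein's) for the numbers of carbon, hydrogen, and oxygen atoms, and $c_C$, $c_H$, $c_O$ for the per-atom constants, the additive assumption reads

$$
c = \sum_\alpha c_\alpha = n_C\,c_C + n_H\,c_H + n_O\,c_O,
\qquad
P = P_\infty - \frac{K\left(n_C\,c_C + n_H\,c_H + n_O\,c_O\right)^2}{\nu^2},
$$

so $c$ is linear in the atom counts with no intercept, and the three constants $c_C$, $c_H$, $c_O$ are the unknowns to be estimated from the 17 molecules.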

It is interesting that not a single graph was provided in the paper. Had he had JMP, he could have used my favorite tool, the Graph Builder canvas, to display the relationship between the constant c and the number of atoms of each element in the molecule.

We can clearly see that c increases linearly with the number of carbon and hydrogen atoms (the last point, corresponding to the molecule with 16 hydrogen atoms, seems to fall off the line), but not so much with the number of oxygen atoms. We can also see that, for the most part, the 17 molecules chosen have more hydrogen atoms than carbon atoms, and more carbon atoms than oxygen atoms. This "look test", as my friend Jim Ford calls it, is the catalyst that can spark new discoveries or new hypotheses about our data.

To find the fitting constants in the linear equation $c = \sum c_\alpha$, he "used for the calculation of $c_\alpha$ for C, H, and O [by] the least squares method". Einstein was clearly familiar with the method and, as Iglewicz (2007) puts it, this was "an early use of statistical arguments in support of a scientific proposition". These days it is very easy for us to fit a linear model using least squares. We just "add" the data to JMP and, with a few clicks, voilà: we get the "fitting constants", or parameter estimates as we statisticians call them, of our linear equation.
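Those few clicks hide a small amount of linear algebra. As a minimal sketch, assuming Python with NumPy, the snippet below fits the no-intercept model $c = n_C c_C + n_H c_H + n_O c_O$ (with $n_C$, $n_H$, $n_O$ the numbers of carbon, hydrogen, and oxygen atoms) by least squares. The atom counts and the "true" constants are made-up illustrative values, not Einstein's data, and the names `atom_counts` and `true_c_alpha` are our own.

```python
import numpy as np

# Hypothetical atom counts, one row per molecule, columns = (carbon, hydrogen, oxygen).
# These are illustrative values only, NOT the 17 molecules Einstein used.
atom_counts = np.array(
    [
        [10, 16, 0],
        [2, 6, 1],
        [3, 6, 1],
        [5, 10, 1],
        [4, 8, 2],
    ],
    dtype=float,
)

# Assumed per-atom constants (c_C, c_H, c_O), used only to generate a noise-free response.
true_c_alpha = np.array([48.0, 3.6, 45.6])
c = atom_counts @ true_c_alpha

# c = sum of c_alpha over the atoms has no intercept, so the design matrix is just
# the atom counts; lstsq minimizes the sum of squared residuals ||c - X b||^2.
c_alpha_hat, _, rank, _ = np.linalg.lstsq(atom_counts, c, rcond=None)

print("rank of design matrix:", rank)
print("estimated (c_C, c_H, c_O):", np.round(c_alpha_hat, 2))
```

Because the response was generated without noise, the estimates reproduce the assumed constants exactly; with real data they would differ, and the residuals would carry the information that regression diagnostics examine.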

Using the parameter estimates from the table, we can write the constant for a given molecule as c = 48.05×(# Carbon Atoms) + 3.63×(# Hydrogen Atoms) + 45.56×(# Oxygen Atoms).
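The fitted equation is easy to turn into a small helper. A minimal sketch, assuming Python; `predict_c` is a hypothetical name, and the coefficients are the parameter estimates quoted in the equation for c. As an example we plug in Limonene, C10H16.

```python
def predict_c(n_carbon: int, n_hydrogen: int, n_oxygen: int) -> float:
    """Predicted constant c from the least squares parameter estimates."""
    return 48.05 * n_carbon + 3.63 * n_hydrogen + 45.56 * n_oxygen


# Limonene, C10H16: 10 carbon atoms, 16 hydrogen atoms, no oxygen.
print(round(predict_c(10, 16, 0), 2))  # 538.58
```

Subtracting such predictions from the observed values of c gives the residuals on which the regression diagnostics are built.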

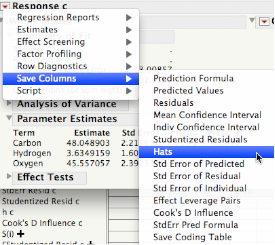

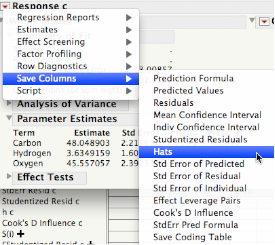

For young Einstein it was another story. Calculations had to be done by hand, and rounding and arithmetic errors crept in. Iglewicz notes "simple arithmetic errors and clumsy data recordings, including use of the floor function rather than rounding". Nor did he have the wealth of regression diagnostics that have since been developed to assess the goodness of a least squares fit. JMP provides many of them in the "Save Columns" menu.

One of the first plots we should look at is the studentized residuals vs. predicted values. Points that scatter around the zero line like "white noise" indicate that the model has done a good job of extracting the signal from the data, leaving behind just noise. For Einstein's data the studentized residuals plot clearly shows a not-so-"white noise" pattern, calling into question the adequacy of the model. Points beyond ±3 on the y-axis are a possible indication of response "outliers". Note that two points are more than 2 units away from the zero center line. One of them corresponds to the high-hydrogen point we saw in the Graph Builder plot.
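The studentized residual rescales each raw residual $e_i$ by its estimated standard error, $r_i = e_i / (s\sqrt{1 - h_i})$, where $s^2$ is the residual mean square and $h_i$ is the leverage (Hat) of observation $i$. Below is a minimal NumPy sketch of this internally studentized version; JMP may label or define its saved variants differently, so treat it as an illustration only. The data are simulated with an arbitrary seed and assumed coefficients, not Einstein's.

```python
import numpy as np


def studentized_residuals(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Internally studentized residuals e_i / (s * sqrt(1 - h_i)) of an OLS fit of y on X."""
    n, p = X.shape
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    # Reduced QR: the hat matrix is Q Q', so its diagonal is the row sums of Q**2.
    q, _ = np.linalg.qr(X)
    hat = np.sum(q**2, axis=1)
    s2 = resid @ resid / (n - p)  # residual mean square
    return resid / np.sqrt(s2 * (1.0 - hat))


# Simulated example: 17 "molecules", 3 atom-count columns, no intercept.
rng = np.random.default_rng(0)
X = rng.integers(0, 13, size=(17, 3)).astype(float)
y = X @ np.array([48.0, 3.6, 45.6]) + rng.normal(0.0, 5.0, size=17)

r = studentized_residuals(X, y)
print(np.round(r, 2))
# Flag potential response outliers with the |r| > 3 rule used in the text.
print("beyond +/-3:", np.flatnonzero(np.abs(r) > 3))
```

Plotting `r` against the fitted values `X @ beta` gives the residual plot described above.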

In addition to the studentized residuals and predicted values, there are two diagnostics, the Hats (leverage) and Cook's D Influence, that are particularly useful for determining whether a particular observation is influential on the fitted model. A high Hat value indicates a point that is distant from the center of the independent variables (an X outlier), while a large Cook's D indicates an influential point, in the sense that excluding it from the fit may noticeably change the parameter estimates.
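Both diagnostics follow from the least squares fit: the Hats are the diagonal of $H = X(X^\top X)^{-1}X^\top$, and Cook's D combines a point's studentized residual $r_i$ with its leverage, $D_i = \frac{r_i^2}{p}\,\frac{h_i}{1-h_i}$, where $p$ is the number of parameters. The minimal NumPy sketch below computes both on simulated data (seed and coefficients are arbitrary assumptions; `hats_and_cooks_d` is a hypothetical name). The closed form gives the same value as literally dropping each observation, refitting, and measuring how far the fitted values move, which is exactly the "excluding it from the fit" interpretation.

```python
import numpy as np


def hats_and_cooks_d(X: np.ndarray, y: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Leverages (Hats) and Cook's D for an OLS fit of y on the columns of X."""
    n, p = X.shape
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    q, _ = np.linalg.qr(X)                 # X = QR with orthonormal Q (n x p)
    hat = np.sum(q**2, axis=1)             # h_i = diagonal of H = Q Q'
    s2 = resid @ resid / (n - p)           # residual mean square
    r = resid / np.sqrt(s2 * (1.0 - hat))  # internally studentized residuals
    cooks_d = (r**2 / p) * hat / (1.0 - hat)
    return hat, cooks_d


# Simulated example with 17 observations and 3 parameters (no intercept).
rng = np.random.default_rng(1)
X = rng.integers(0, 13, size=(17, 3)).astype(float)
y = X @ np.array([48.0, 3.6, 45.6]) + rng.normal(0.0, 5.0, size=17)

hat, cooks_d = hats_and_cooks_d(X, y)
print("sum of Hats (equals p):", round(hat.sum(), 6))
print("largest Cook's D at row", int(np.argmax(cooks_d)))
```

Since the Hats always sum to $p$, their average is $p/n$, which is where the usual "twice the average" cutoff comes from.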

A great way to combine the studentized residuals, the Hats, and the Cook's D is a bubble plot. We plot the studentized residuals on the Y-axis and the Hats on the X-axis, and use the Cook's D to set the size of each bubble. We overlay a reference line at 0 on the Y-axis, to help the eye assess randomness and gauge distances from 0, and one at 2×3/17 ≃ 0.35 on the X-axis, to judge how large the Hats are. Here 3 is the number of parameters, or "fitting constants", in the model, and 17 is the number of observations.
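The reference lines and the corresponding flags are simple to compute. A minimal sketch, assuming Python; `leverage_cutoff` and `flag_point` are hypothetical helper names, the ±3 and 2p/n cutoffs are the ones used in this post, and the Cook's D > 1 cutoff is a common rule of thumb that we add as an assumption. We check it against the values the post reports for Limonene.

```python
def leverage_cutoff(n_params: int, n_obs: int) -> float:
    """Reference line for the Hats: twice the average leverage, 2p/n."""
    return 2 * n_params / n_obs


def flag_point(stud_resid: float, hat: float, cooks_d: float,
               n_params: int, n_obs: int) -> dict[str, bool]:
    """Flag one observation with simple cutoffs (Cook's D > 1 is an assumed rule of thumb)."""
    return {
        "y_outlier": abs(stud_resid) > 3,
        "high_leverage": hat > leverage_cutoff(n_params, n_obs),
        "influential": cooks_d > 1,
    }


print(round(leverage_cutoff(3, 17), 4))  # 0.3529
# Limonene: studentized residual -3.02, Hat 0.47, Cook's D 1.99 (values from the text).
print(flag_point(-3.02, 0.47, 1.99, n_params=3, n_obs=17))
```

In the bubble plot these cutoffs become the horizontal and vertical reference lines, and the bubble size plays the role of the Cook's D flag.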

Right away we can see that molecule #1, Limonene, the one with the largest number of hydrogen atoms (16), is a possible outlier in both Y (studentized residual = -3.02) and X (Hat = 0.47), and also an influential observation (Cook's D = 1.99). Molecule #16, Valeraldehyde, could also be an influential observation. What to do? One can fit the model without Limonene and Valeraldehyde to study their effect on the parameter estimates, or perhaps consider a different model.
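Refitting without selected rows is short work once the data are in arrays. A minimal NumPy sketch, assuming simulated data in which one row (a stand-in for a Limonene-like point) has been deliberately perturbed; the function name `fit_with_and_without`, the seed, and the row index are hypothetical.

```python
import numpy as np


def fit_with_and_without(X, y, drop):
    """Least squares estimates using all rows, and with the rows in `drop` excluded."""
    keep = np.setdiff1d(np.arange(len(y)), drop)
    full, *_ = np.linalg.lstsq(X, y, rcond=None)
    reduced, *_ = np.linalg.lstsq(X[keep], y[keep], rcond=None)
    return full, reduced


# Simulated, noise-free data from assumed constants; then one row is perturbed.
rng = np.random.default_rng(2)
true_c_alpha = np.array([48.0, 3.6, 45.6])
X = rng.integers(1, 13, size=(17, 3)).astype(float)
y = X @ true_c_alpha
y[0] -= 100.0  # make row 0 an artificial outlier

full, reduced = fit_with_and_without(X, y, drop=[0])
print("all rows:     ", np.round(full, 2))
print("row 0 dropped:", np.round(reduced, 2))
```

Comparing the two vectors shows how much the excluded rows pull the estimates; with the real data, the rows to drop would be those for Limonene and Valeraldehyde.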

In the final paragraph of his paper Einstein notes that "Finally, it is noteworthy that the constants cα in general increase with increasing atomic weight, but not always proportionally", and goes on to outline some questions that need further investigation, pointing out that "We also assume that the potential of the molecular forces is the same as if the matter were to be evenly distributed in space. This, however, is an assumption which we can expect to be only approximately true." Murrell and Grobert (2002) indicate that this is a very poor approximation because it "greatly overestimate[s] the attractive forces" between the molecules.

For more on Einstein's first published paper, see the papers by Murrell and Grobert (2002) and Iglewicz (2007), and Chapter 7 of our book Analyzing and Interpreting Continuous Data Using JMP, where we analyze Einstein's data in more detail.
