You are probably familiar with confidence intervals as a way to place bounds, with a given statistical confidence, on the parameters of a distribution. In my post "I Am Confident That I Am 95% Confused!", I used the DC resistance data shown below to illustrate confidence intervals and the meaning of confidence.

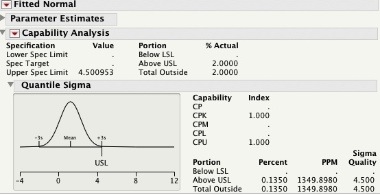

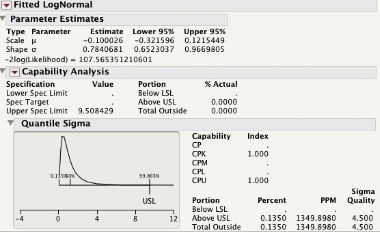

From the JMP output we can see that the average resistance for the sample of 40 cables is 49.94 Ohm and that, with 95% confidence, the population average DC resistance can be as low as 49.31 Ohm or as large as 50.57 Ohm. We can also compute a confidence interval for the standard deviation. That's right, even the estimate of noise has noise. This is easily done by selecting Confidence Interval > 0.95 from the contextual menu next to DC Resistance.

We can say that, with 95% confidence, the DC resistance standard deviation can be as low as 1.61 Ohm, or as large as 2.52 Ohm.
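As a cross-check, both intervals can be reproduced from the summary statistics alone using the standard normal-theory formulas: a t interval for the mean and a chi-square interval for the standard deviation. This is a minimal sketch that assumes SciPy is available and uses the rounded values n = 40, mean = 49.94 Ohm, and standard deviation = 1.96 Ohm (the value used later in this post), so the last digit could occasionally differ from JMP's output.

```python
from math import sqrt

from scipy import stats

# Summary statistics from the JMP output (rounded to two decimals).
n = 40          # number of cables
xbar = 49.94    # sample mean, Ohm
s = 1.96        # sample standard deviation, Ohm
confidence = 0.95
alpha = 1 - confidence
df = n - 1

# t interval for the mean: xbar ± t(1 - alpha/2, n - 1) * s / sqrt(n)
t_crit = stats.t.ppf(1 - alpha / 2, df)
half_width = t_crit * s / sqrt(n)
mean_ci = (xbar - half_width, xbar + half_width)

# Chi-square interval for sigma: s * sqrt((n - 1) / chi-square quantile).
# The upper chi-square quantile gives the lower bound, and vice versa.
sd_ci = (
    s * sqrt(df / stats.chi2.ppf(1 - alpha / 2, df)),
    s * sqrt(df / stats.chi2.ppf(alpha / 2, df)),
)

print(f"mean:  [{mean_ci[0]:.2f}, {mean_ci[1]:.2f}] Ohm")
print(f"sigma: [{sd_ci[0]:.2f}, {sd_ci[1]:.2f}] Ohm")
```

Note that the interval for sigma is not symmetric around 1.96 Ohm, because the chi-square distribution is skewed.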

Although confidence intervals have many uses in engineering and scientific applications, often we need bounds not on the distribution parameters but on a given proportion of the population. In her post "3 Is The Magic Number", Brenda discussed why the mean ± 3×(standard deviation) formula is popular for setting specifications. For a Normal distribution, if we know the mean and standard deviation without error, we expect about 99.73% of the population to fall within mean ± 3×(standard deviation). In fact, for any distribution with finite variance, Chebyshev's inequality guarantees that at least 88.89% (1 − 1/3²) of the population will be contained within mean ± 3×(standard deviation). It gets better: if the distribution is unimodal, the Vysochanskij-Petunin inequality guarantees that at least 95.06% (1 − 4/(9×3²)) of the population will be within mean ± 3×(standard deviation).
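The three coverage figures in the previous paragraph follow from short formulas in k, the number of standard deviations. The sketch below uses only the Python standard library; it assumes the true mean and standard deviation are known, which is exactly the assumption the tolerance interval later removes.

```python
from math import erf, sqrt


def normal_coverage(k: float) -> float:
    """Exact fraction of a Normal population within mean ± k*sigma."""
    return erf(k / sqrt(2))


def chebyshev_bound(k: float) -> float:
    """Chebyshev: at least 1 - 1/k^2 lies within mean ± k*sigma (finite variance)."""
    return 1 - 1 / k**2


def unimodal_bound(k: float) -> float:
    """Vysochanskij-Petunin: at least 1 - 4/(9k^2) for unimodal distributions."""
    if k <= sqrt(8 / 3):
        raise ValueError("the Vysochanskij-Petunin bound requires k > sqrt(8/3)")
    return 1 - 4 / (9 * k**2)


k = 3
for name, bound in [
    ("Normal", normal_coverage),
    ("Chebyshev", chebyshev_bound),
    ("Unimodal", unimodal_bound),
]:
    print(f"{name:>10}: {bound(k):.2%}")
```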

In real applications, however, we seldom know the mean and standard deviation without error. These two parameters have to be estimated from a random, representative, and usually small sample. A tolerance interval is a statistical coverage interval that includes at least a given proportion of the population with a specified confidence. This type of interval takes into account both the sample size and the noise in the estimates of the mean and standard deviation. For normally distributed data, an approximate two-sided tolerance interval is given by

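$$\left[\bar{x} - g_{(1-\alpha/2,\,p,\,n)}\,s,\;\; \bar{x} + g_{(1-\alpha/2,\,p,\,n)}\,s\right]$$

where $\bar{x}$ and $s$ are the sample mean and sample standard deviation.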

Here g(1-α/2,p,n) takes the place of the magic "3" and is a function of the confidence, 1-α/2; the proportion of the population, p, that we want the interval to cover (you can think of this as the yield that we want to bracket); and the sample size, n. These intervals are readily available in JMP by selecting Tolerance Interval within the Distribution platform and specifying the confidence and the proportion of the population to be covered by the interval.
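To see how the factor g depends on the sample size, here is a minimal sketch using Howe's (1969) approximation to the two-sided normal tolerance factor. This is a swapped-in, well-known approximation, not necessarily the computation JMP performs, and it assumes SciPy is available. The function name `howe_g` is hypothetical, and it takes the confidence level directly (0.95 here) rather than using the α notation above.

```python
from math import sqrt

from scipy import stats


def howe_g(p: float, confidence: float, n: int) -> float:
    """Howe's approximation to the two-sided normal tolerance factor.

    p: proportion of the population to cover (e.g. 0.9973)
    confidence: confidence level (e.g. 0.95)
    n: sample size
    """
    # Multiplier we would use if the mean and sigma were known exactly.
    z = stats.norm.ppf((1 + p) / 2)
    # Lower (1 - confidence) quantile of chi-square with n - 1 degrees of freedom.
    chi2_low = stats.chi2.ppf(1 - confidence, n - 1)
    # Inflate z to account for the noise in the estimated mean and sigma.
    return z * sqrt((n - 1) * (1 + 1 / n) / chi2_low)


# g shrinks toward the "magic 3" as the sample size grows.
for n in (10, 40, 100, 1000):
    print(f"n = {n:>4}: g = {howe_g(0.9973, 0.95, n):.3f}")
```

For small samples g is considerably larger than 3, which is the price paid for estimating both parameters from limited data.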

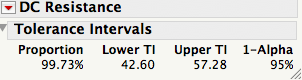

For the DC resistance data, the mean ± 3×(standard deviation) interval is 49.94 ± 3×1.96 = [44.06 Ohm; 55.82 Ohm], while the 95% tolerance interval to cover at least 99.73% of the population is [42.60 Ohm; 57.28 Ohm]. The tolerance interval is wider than the mean ± 3×(standard deviation) interval because it accounts for the error in the estimates of the mean and standard deviation, which is substantial for a small sample. Here we use a coverage of 99.73% because this is the proportion of a Normal population expected within mean ± 3×(standard deviation) if we knew the mean and sigma exactly.
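The comparison above can be sketched from the summary statistics. This minimal example again assumes SciPy and uses Howe's approximation for g (a swapped-in method, not necessarily JMP's), with the rounded standard deviation of 1.96 Ohm, so the tolerance limits may differ from JMP's in the second decimal.

```python
from math import sqrt

from scipy import stats

n, xbar, s = 40, 49.94, 1.96   # summary statistics for the DC resistance data
p, confidence = 0.9973, 0.95   # coverage and confidence for the tolerance interval

# Naive interval: treats xbar and s as if they were the true mean and sigma.
naive = (xbar - 3 * s, xbar + 3 * s)

# Howe's approximation to the two-sided tolerance factor g.
z = stats.norm.ppf((1 + p) / 2)
g = z * sqrt((n - 1) * (1 + 1 / n) / stats.chi2.ppf(1 - confidence, n - 1))
tolerance = (xbar - g * s, xbar + g * s)

print(f"mean ± 3s:            [{naive[0]:.2f}, {naive[1]:.2f}] Ohm")
print(f"tolerance (g = {g:.2f}): [{tolerance[0]:.2f}, {tolerance[1]:.2f}] Ohm")
```

With these inputs g is roughly 3.74 instead of 3, which is where the extra width of the tolerance interval comes from.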

Tolerance intervals are a great practical tool because they can be used to set specification limits, to serve as surrogates for specification limits, or to compare against a given set of specification limits (e.g., from our customer) to see whether we will be able to meet them.