A control or process behavior chart is commonly used to determine if the output for a process is in a "state of statistical control", i.e., it is stable or predictable. A fun exercise is to generate random noise, plot it on a control chart and then ask users to interpret what they see. The range of answers is as diverse as asking someone to interpret the meaning behind a surrealist painting by Salvador Dalí. As a case in point, take a look at the control chart below and determine if the output of this process is stable or not.

I suppose a few of you would recognize this as white noise, while others may see some interesting patterns. What about those 2 points that are close to the control limits? Is there more variation in the first half of the series than the second half? Is there a shift in the process mean in the second half of the series? Is there a cycle?

How can we take some of the subjectivity out of interpreting control charts? Western Electric rules are often recommended for assessing process stability. They are certainly more reliable than merely eyeballing the chart, since we humans tend to see patterns where there are none, and they can provide us with important insights about our data. For instance, the same data is shown below with 4 runs tests turned on. We see two runs test violations. Test 2 detects a shift in the process mean by looking for at least 8 points in a row falling on the same side of the center line, while Test 5 flags when at least 2 out of 3 successive points fall on the same side of the center line and more than 2 sigma units away from it (Zone A or beyond). Does this mean our process output is unstable?

Remember, this data represents random noise. Some of you may be surprised that there are any violations in runs rules, but these are what we call 'false alarms'. Yes, even random data will occasionally violate runs rules with some expected frequency. False alarms add to the complexity of identifying truly unstable processes. Once again, how can we take some of the subjectivity out of interpreting control charts?

Method 1 and Method 2 to the rescue! In José's last post, he described 3 ways for computing the standard deviation. Recall that Method 1 uses all of the data to calculate a global estimate of the standard deviation using the formula for the sample standard deviation. Method 2, however, uses a local estimate of variation: it averages the subgroup ranges, or in this case the moving ranges, and divides that average range by the scaling factor d2. When the process is stable, these two estimates will be close in value, and the ratio of their squared values (SR ratio) will be close to 1. If our process is unstable, then the standard deviation estimate from Method 1 will most likely be larger than the estimate from Method 2, and the ratio of their squared values will be greater than 1.
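As a rough sketch of the calculation just described, the following Python computes both estimates for an individuals chart and takes the ratio of their squares. The function names and the simulated data are assumptions for illustration only. The constant 1.128 is the standard d2 value for ranges of two observations, which is what a moving range of span 2 uses.

```python
import numpy as np

D2_SPAN2 = 1.128  # d2 scaling factor for ranges of 2 observations

def sigma_method1(x):
    """Global estimate: sample standard deviation of all the data."""
    return float(np.std(np.asarray(x, dtype=float), ddof=1))

def sigma_method2(x):
    """Local estimate: average moving range divided by d2."""
    moving_ranges = np.abs(np.diff(np.asarray(x, dtype=float)))
    return float(moving_ranges.mean() / D2_SPAN2)

def sr_ratio(x):
    """Ratio of the squared global estimate to the squared local estimate."""
    return (sigma_method1(x) / sigma_method2(x)) ** 2

# Illustrative data: white noise, and the same noise with a mean shift
rng = np.random.default_rng(1)
noise = rng.normal(size=100)
shifted = noise + np.where(np.arange(100) >= 50, 3.0, 0.0)

print(f"SR ratio, white noise:  {sr_ratio(noise):.2f}")
print(f"SR ratio, shifted mean: {sr_ratio(shifted):.2f}")
```

The mean shift inflates the global estimate but barely touches the moving ranges, which is why the second ratio comes out well above 1.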

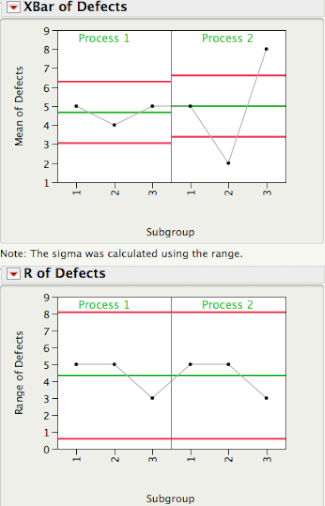

For the random data in the control chart shown above, the SR ratio = 1.67²/1.62² = 1.06, which is close to 1, suggesting that the process is stable, or in a state of statistical control. As a counterpoint, let's calculate the SR ratio for the control chart shown in my last post, which is reproduced below. The SR ratio = 2.35²/0.44² = 28.52, which is way bigger than 1. This suggests an unstable process; however, in this case, it is due to the inappropriate control limits for this data.

The SR ratio is a very useful statistic to complement the visual assessment of the stability of a process. It also provides a consistent metric for classifying a process as stable or unstable and, in conjunction with the Cpk, can be used to assess the health of a process (more in a future post). For the two examples shown, it was easy to interpret the SR ratios of 1.06 and 28.52, which represent the two extremes of stability and instability. But what happens if we obtain an SR ratio of 1.5 or 2? Is that close to 1 or not? For these situations, we need to obtain the p-value for the SR ratio and determine if it is statistically significant at a given significance level. To learn more about the SR ratio and other stability assessment criteria, see the paper I co-authored with Professor George Runger, Quantitative Assessment to Evaluate Process Stability.
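One simple way to get a feel for such a p-value is by simulation. The sketch below is not the method from the paper. It is a Monte Carlo stand-in that assumes independent, normally distributed data and an individuals chart with moving ranges of span 2. The function names and the default number of simulations are arbitrary choices for illustration.

```python
import numpy as np

def sr_ratio(x):
    """SR ratio along the last axis: (global sigma / local sigma) squared."""
    x = np.asarray(x, dtype=float)
    sigma_global = np.std(x, ddof=1, axis=-1)
    sigma_local = np.abs(np.diff(x, axis=-1)).mean(axis=-1) / 1.128  # d2 for span 2
    return (sigma_global / sigma_local) ** 2

def sr_pvalue_mc(observed_sr, n, n_sim=20000, seed=0):
    """Approximate P(SR >= observed_sr) for a stable process of length n.

    Assumption: a stable process is modeled as independent normal noise.
    The SR ratio does not depend on the mean or scale of the data,
    so standard normal draws are sufficient.
    """
    rng = np.random.default_rng(seed)
    simulated = sr_ratio(rng.normal(size=(n_sim, n)))
    return float((simulated >= observed_sr).mean())

# Example: how unusual are SR ratios of 1.5 and 2 for a series of 50 points?
for sr in (1.5, 2.0):
    print(f"SR = {sr}: simulated p-value = {sr_pvalue_mc(sr, n=50):.4f}")
```

Because the answer depends on the series length `n`, the same SR ratio can be unremarkable for a short series and highly significant for a long one.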